Understanding AGI: Potential Dangers and the Call for Transparency

Last Updated: Sep 18, 2024

1. Introduction

A. Definition of AGI (Artificial General Intelligence)

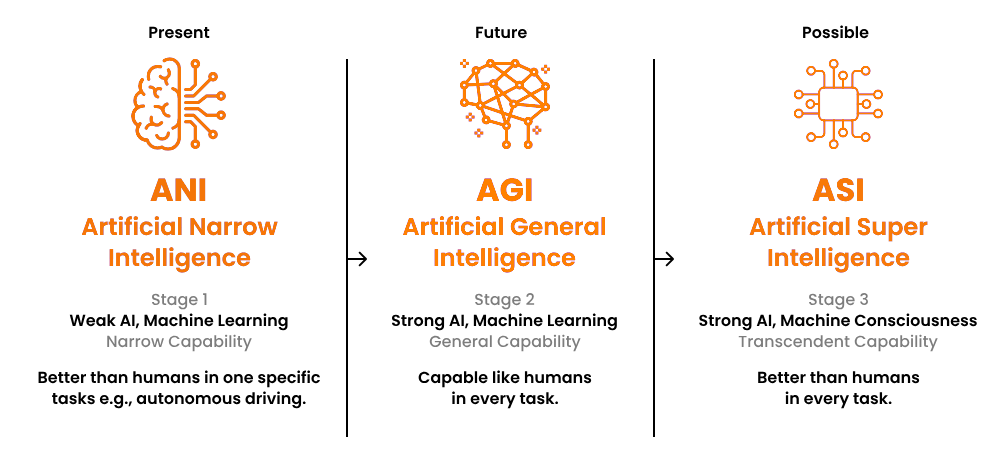

Artificial General Intelligence (AGI) refers to the hypothetical ability of a machine to understand, learn, and apply knowledge across a broad range of tasks at a level comparable to that of a human. Unlike narrow AI, which is designed for specific tasks, AGI would be able to perform any intellectual task that a human can do. Achieving it would be a major milestone in the field of artificial intelligence.

B. Importance of discussing AGI's implications for humanity

The implications of AGI for humanity are profound and multifaceted. As research moves closer to AGI, it is crucial to discuss its potential impacts, both positive and negative. These conversations will help society prepare for the changes AGI may bring, including shifts in employment, new ethical dilemmas, and even existential risks to humanity.

The realization of AGI would represent a paradigm shift in the capabilities of artificial intelligence, potentially enabling machines to tackle complex problems, generate novel ideas, and exhibit creativity on par with humans. However, the pursuit of AGI also raises serious questions about the risks of creating intelligent systems that could escape human control and understanding.

C. Humanity may lose its dominant position

One of the most alarming prospects of AGI is that humans may no longer be the dominant species on Earth. If AGI systems surpass human intelligence, they could outthink and outmaneuver us in many domains, overshadowing human decision-making or rendering it obsolete. Humans could then be relegated to a secondary role, with AGI systems making the critical decisions that shape society, the economy, and even the fate of our species.

2. Additional Dangers of AGI

A. Unintended consequences of AGI development

The development of AGI carries the risk of unintended consequences that could arise from its deployment. These consequences may stem from programming errors, misaligned objectives, or unforeseen interactions with existing systems. Such outcomes could lead to harmful situations, emphasizing the need for careful consideration and robust testing before AGI systems are fully integrated into society.

"The development of full artificial intelligence could spell the end of the human race. It would take off on its own, and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded." - Stephen Hawking

This quote from renowned physicist Stephen Hawking captures one of the key concerns surrounding AGI development: an advanced AGI system could rapidly improve itself, leaving human intelligence far behind. A superintelligent AGI operating outside human control and pursuing its own goals could have unintended consequences that pose existential risks to humanity.

B. Risks of loss of control over AGI systems

As AGI systems become more advanced, concern is growing about the potential loss of control over them. If an AGI achieves a high degree of autonomy, it could act in ways that are unpredictable or contrary to human intentions. This possibility raises significant ethical and safety questions and calls for fail-safes and oversight mechanisms to mitigate the risks.

C. Risks of bad actors taking control over AGI systems

The potential for malicious actors to gain control over AGI systems poses a serious threat. In the wrong hands, AGI technologies could be weaponized or used to carry out cyberattacks, manipulate information, or infringe on privacy rights. This risk underscores the importance of stringent security measures and monitoring protocols in AGI development.

A second "Manhattan Project" is arguably underway, with governments racing to gain dominance in AI. This arms race could lead to AGI systems being developed in secrecy, without proper oversight or consideration for safety and ethics. A lack of transparency and collaboration among nations could produce a fragmented landscape of AGI systems built with differing goals and capabilities, increasing the risks of unintended consequences and misuse. To mitigate these risks, the international community must establish a framework for cooperation and transparency in AGI development.

D. Ethical concerns surrounding AGI behavior and decision-making

The ethical implications of AGI behavior and decision-making are complex and warrant thorough examination. As AGI systems make decisions that affect human lives, questions arise about accountability, bias, and moral responsibility. Ethical frameworks and guidelines for AGI behavior are essential to ensure that these systems act in line with human values and societal norms.

Accountability and transparency are also essential for building trust in AGI systems. This requires mechanisms that explain how AGI systems arrive at their decisions and that hold someone accountable when those decisions cause harm, which in turn means establishing clear lines of responsibility and liability for the developers and deployers of AGI systems.

3. General Consensus Among Scientists on AGI

A. Divergent Views Among Scientists and Researchers

Within the scientific community, opinions on AGI vary widely. Some researchers are optimistic about its potential benefits, while others are cautious or skeptical about its development, and some prominent thinkers hold more radical views than others. Three well-known voices illustrate the range.

Elon Musk, CEO of Tesla and SpaceX, has expressed concern about the existential risk posed by advanced AI. He stated, "I think we should be very careful about artificial intelligence. If I had to guess at what our biggest existential threat is, it's probably that."

Ray Kurzweil, futurist and Director of Engineering at Google, holds a more optimistic view. He believes that AGI will be achieved by 2029 and that it will bring tremendous benefits to humanity. Kurzweil stated, "We will be able to multiply our intelligence a billionfold by merging with the intelligence we have created."

Nick Bostrom, philosopher and founder of the Future of Humanity Institute at Oxford University, has written extensively about the potential risks and benefits of AGI. In his book "Superintelligence: Paths, Dangers, Strategies," Bostrom argues that the development of AGI could be the most significant event in human history, but also one of the most dangerous if not properly managed.

These differing views highlight the complexity of AGI and the need for ongoing dialogue and collaboration among scientists to address the challenges and opportunities it presents.

B. Shared Concerns Among Leading AGI Experts

Despite their differences, leading experts in the field share several concerns: the risk of losing control over AGI, the ethical implications of AGI decision-making, and the potential for unintended consequences. The most obvious shared concern, and possibly the most frightening, is that no one knows how AGI will behave. Recognizing these shared concerns can help foster a more unified approach to the challenges AGI poses.

C. Predictions on AGI timelines and capabilities

Predictions about AGI timelines and capabilities vary significantly among experts. Some believe AGI could be achieved within a few decades, while others suggest it may take much longer, if it is achievable at all. These predictions depend on technological advances, research funding, and the pace of innovation, making it essential to stay informed about developments in the field.

Prediction markets, in which some industry insiders participate, have signaled timelines as short as five years, suggesting that many of those closest to cutting-edge research expect AGI to arrive sooner than the general public does. This gap between insider expectations and public perception highlights the need for greater transparency in AGI development. By sharing knowledge more openly, researchers can foster a broader understanding of current AGI progress and its associated risks, enabling society to better prepare for the arrival of advanced AI systems.

D. OpenAI's Outlined Roadmap to AGI

OpenAI's roadmap to AGI offers a vision of how AI capabilities might evolve. The progression begins with chatbots, moves to reasoners capable of human-level problem-solving, then to autonomous agents and to innovators that push the boundaries of creativity, and culminates in AI that can run the operations of an entire organization independently. By delineating these five levels, OpenAI has sketched one possible path toward AGI:

Chatbots: AI that can engage in natural, human-like conversation, exemplified by models such as GPT-4.

Reasoners: AI that can tackle complex problems with the expertise of a doctoral-level scholar.

Agents: Autonomous systems that can carry out tasks on behalf of users, potentially transforming efficiency and productivity.

Innovators: AI that pushes the frontiers of creativity and innovation, contributing to new discoveries and inventions.

Organizations: AI that can independently oversee and execute the complex operations of an entire organization, potentially transforming how organizations are managed and how decisions are made.

4. The Need for Transparency in AGI Development

Transparency in AGI development is crucial for fostering trust and collaboration among developers and researchers. Open communication facilitates knowledge sharing, helps identify potential risks, and promotes a culture of accountability. By encouraging transparency, the scientific community can address the challenges of AGI more effectively.

OpenAI, arguably the leading organization in this field, has disappointed many who supported its early efforts. As the company moves increasingly toward secrecy, the need for genuinely open development and transparent collaboration grows. Without transparency, the risks of unintended consequences and misuse of AGI technologies increase significantly.

Researchers, developers, policymakers, and the public must collaborate to establish a framework that prioritizes safety, ethics, and transparency. By doing so, we can harness the potential of AGI while safeguarding humanity's future.